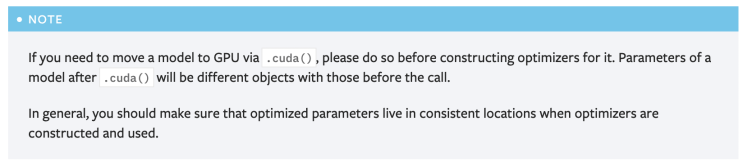

While implementing resume, I loaded the optimizer I had saved and ran into this error...

... `RuntimeError: Expected all tensors to be on the same device, but found at least two devices, cuda:0`

Do I need to call `.cuda()` on the optimizer too...? While I was mulling that over, I checked the official documentation and found the answer.

torch.optim — PyTorch master documentation

> Constructing it
>
> To construct an Optimizer you have to give it an iterable containing the parameters (all should be `Variable`s) to optimize. Then, you can specify optimizer-specific options such as the learning rate, weight decay, etc.
>
> Note If you ...
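Assuming the cut-off note is the usual torch.optim advice to move the model to the GPU *before* constructing its optimizer, the fix is about ordering rather than calling `.cuda()` on the optimizer. Below is a minimal sketch of a resume flow under that assumption. The `nn.Linear` model, the `Adam` optimizer, the checkpoint keys `"model"`/`"optimizer"`, and the helpers `make_checkpoint` and `resume` are all hypothetical stand-ins; the post does not show the actual training code.

```python
import os
import tempfile

import torch
import torch.nn as nn


def make_checkpoint(path: str) -> None:
    """Hypothetical training run: take one Adam step, then save model + optimizer state."""
    model = nn.Linear(4, 2)
    optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)
    loss = model(torch.randn(8, 4)).sum()
    loss.backward()
    optimizer.step()  # Adam now holds per-parameter exp_avg / exp_avg_sq tensors
    torch.save({"model": model.state_dict(), "optimizer": optimizer.state_dict()}, path)


def resume(path: str, device: torch.device):
    """Rebuild the model and optimizer so their tensors end up on `device`."""
    checkpoint = torch.load(path, map_location=device)
    model = nn.Linear(4, 2)
    model.load_state_dict(checkpoint["model"])
    # 1. Move the model first.
    model.to(device)
    # 2. Only then construct the optimizer over the (already moved) parameters.
    optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)
    # 3. load_state_dict casts the state tensors (exp_avg, ...) to each parameter's device.
    optimizer.load_state_dict(checkpoint["optimizer"])
    # Doing steps 2-3 while the model is still on the CPU and calling model.cuda()
    # afterwards would move the parameters but leave Adam's state on the CPU,
    # which is one likely way to hit the "same device" RuntimeError.
    return model, optimizer


if __name__ == "__main__":
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    path = os.path.join(tempfile.mkdtemp(), "checkpoint.pt")
    make_checkpoint(path)
    model, optimizer = resume(path, device)
    # One more training step after resuming; parameters and optimizer state share a device.
    loss = model(torch.randn(8, 4, device=device)).sum()
    loss.backward()
    optimizer.step()
    print("resumed on", device)
```

Passing `map_location` to `torch.load` is optional here, since `load_state_dict` moves the tensors anyway, but it avoids first materializing a GPU-saved checkpoint on a GPU you may not want to use.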

#pytorch

#runtimeerror

#deeplearning

#PyTorch

Original post: RuntimeError: Expected all tensors to be on the same device, but found at least two devices, cuda:0
