![[Paper Review] Big Bird: Transformers for Longer Sequences](http://img1.daumcdn.net/thumb/R800x0/?scode=mtistory2&fname=https%3A%2F%2Fblog.kakaocdn.net%2Fdn%2FbwWPlW%2Fbtr0frXBIeE%2FXMbPRWk5KdaDkarjUtMaTk%2Fimg.png)

In this post, I review Big Bird: Transformers for Longer Sequences, the paper that introduced Big Bird. The paper was presented at NeurIPS 2020. The abstract of the original paper begins as follows: "Transformers-based models, such as BERT, have been one of the most successful deep learning models for NLP. Unfortunately, one of their core limitations is the quadratic dependency (mainly in terms of memory) on the sequen.."

Original post: [Paper Review] Big Bird: Transformers for Longer Sequences

![[JavaScript] JavaScript의 객체 [JavaScript] JavaScript의 객체](http://img1.daumcdn.net/thumb/R800x0/?scode=mtistory2&fname=https%3A%2F%2Ft1.daumcdn.net%2Ftistory_admin%2Fstatic%2Fimages%2FopenGraph%2Fopengraph.png)

![[논문 리뷰] Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks [논문 리뷰] Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks](http://img1.daumcdn.net/thumb/R800x0/?scode=mtistory2&fname=https%3A%2F%2Fblog.kakaocdn.net%2Fdn%2Fcu77eM%2FbtscQaJ0rOg%2FoCvPXGa1z6vuqfZrs7fvTK%2Fimg.png)

![[논문 리뷰] Attention is all you need - transformer란? [논문 리뷰] Attention is all you need - transformer란?](http://img1.daumcdn.net/thumb/R800x0/?scode=mtistory2&fname=https%3A%2F%2Fblog.kakaocdn.net%2Fdn%2FbUpk9h%2FbtrSJxEMBiv%2FR6KGb5SxKKqZadbZZYV2d1%2Fimg.png)

![[알고리즘] 정렬 알고리즘(Sorting algorithm) (1) [알고리즘] 정렬 알고리즘(Sorting algorithm) (1)](http://img1.daumcdn.net/thumb/R800x0/?scode=mtistory2&fname=https%3A%2F%2Fblog.kakaocdn.net%2Fdn%2FbKsLwh%2FbtrHsEWmQUC%2FnSqYAkMw1QPLETMNKBHOcK%2Fimg.png)

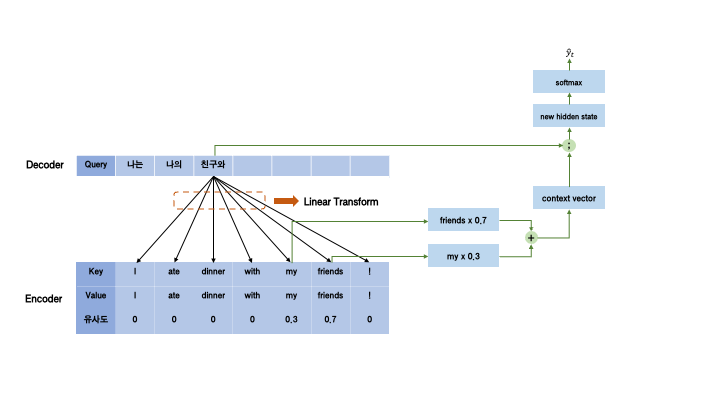

![[논문 리뷰] Neural Machine Translation by Jointly Learning to Align and Translate - Bahdanau Attention [논문 리뷰] Neural Machine Translation by Jointly Learning to Align and Translate - Bahdanau Attention](http://img1.daumcdn.net/thumb/R800x0/?scode=mtistory2&fname=https%3A%2F%2Fblog.kakaocdn.net%2Fdn%2FAms1H%2FbtrSKuVeg6Z%2FcoMuo26iQdCJo84POyKrrK%2Fimg.png)

![[논문 리뷰] GLUE: A Multi-Task Benchmark and Analysis Platform for Natural Language Understanding - GLUE [논문 리뷰] GLUE: A Multi-Task Benchmark and Analysis Platform for Natural Language Understanding - GLUE](http://img1.daumcdn.net/thumb/R800x0/?scode=mtistory2&fname=https%3A%2F%2Fblog.kakaocdn.net%2Fdn%2FEeiqp%2FbtrTA66pOe5%2FuKll6eklhDBc5w8KMiV7pk%2Fimg.png)
