![[Foundation Model][Large Language Model] GPT-NeoX-20B](http://img1.daumcdn.net/thumb/R800x0/?scode=mtistory2&fname=https%3A%2F%2Ft1.daumcdn.net%2Ftistory_admin%2Fstatic%2Fimages%2FopenGraph%2Fopengraph.png)

GPT-NeoX-20B: https://huggingface.co/EleutherAI/gpt-neox-20b

GPT-NeoX-20B is a 20 billion parameter autoregressive language model trained on the Pile using the GPT-NeoX library. Its architecture intentionally resembles that of GPT-3, and is almost identical to that of GPT-J-6B. Its training dataset contains a multi...

Transformer (Decoder)-based ..
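To make the "20 billion parameter" figure concrete, here is a rough back-of-the-envelope sketch in Python. The layer count, hidden size, and vocabulary size below are not stated in this post; they are assumed from the publicly released model configuration. The function name `estimate_decoder_params` is hypothetical, and the formula ignores biases and layer-norm weights, so treat the result as an approximation rather than an exact count.

```python
def estimate_decoder_params(n_layers, d_model, vocab_size, tied_embeddings=False):
    """Approximate parameter count of a GPT-style decoder-only Transformer.

    Ignores biases and layer-norm parameters, which are comparatively small.
    """
    # Attention: Q, K, V and output projections -> 4 * d_model^2
    # MLP with a 4x hidden expansion: up + down projections -> 8 * d_model^2
    per_layer = 12 * d_model ** 2

    # Input embedding matrix, plus a separate output projection if untied.
    embedding = vocab_size * d_model
    if not tied_embeddings:
        embedding *= 2

    return n_layers * per_layer + embedding


# Assumed values (not from this post): 44 layers, hidden size 6144,
# padded vocabulary of 50432 tokens, untied input/output embeddings.
total = estimate_decoder_params(n_layers=44, d_model=6144, vocab_size=50432)
print(f"approx. {total / 1e9:.2f}B parameters")
```

Under these assumptions the estimate lands a little above 20 billion, which is consistent with the model's name.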

Original post: [Foundation Model][Large Language Model] GPT-NeoX-20B

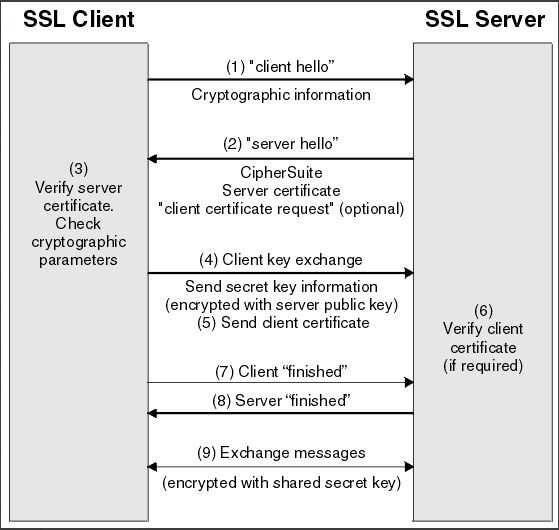

![[Security] 보안 요구사항 분석(Analyzing Security Requirements) [Security] 보안 요구사항 분석(Analyzing Security Requirements)](http://img1.daumcdn.net/thumb/R800x0/?scode=mtistory2&fname=https%3A%2F%2Fblog.kakaocdn.net%2Fdn%2F77DEz%2FbtrA54S4QZx%2FrHnmFxTCJ5o5fILnuzDR9K%2Fimg.png)

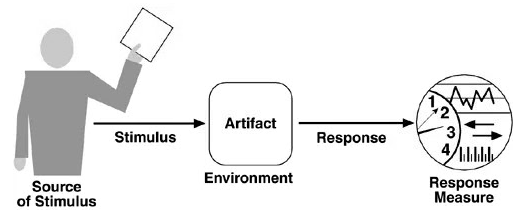

![[Machine Learning] Data Mining [Machine Learning] Data Mining](http://img1.daumcdn.net/thumb/R800x0/?scode=mtistory2&fname=https%3A%2F%2Fblog.kakaocdn.net%2Fdn%2FuOv1a%2FbtrNQroLo7H%2FlqO02fseVobBBtMEz4M5dK%2Fimg.jpg)

네이버 블로그

네이버 블로그 티스토리

티스토리 커뮤니티

커뮤니티