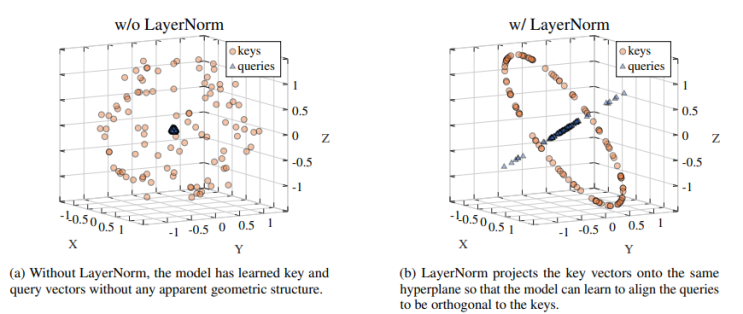

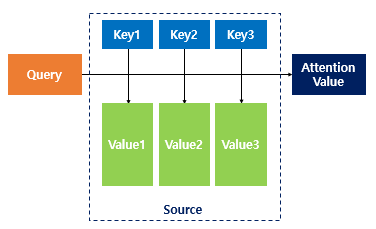

Title: On the Expressivity Role of LayerNorm in Transformers' Attention

Authors: Shaked Brody, Uri Alon, Eran Yahav

Layer Normalization (LayerNorm) is an inherent component in all Transformer-based models. In this paper, we show that LayerNorm is crucial to the expressivity of the multi-head attention layer that follows it. This is in contrast to the common belief that LayerNorm's only role is to normalize the ac... (arxiv.org)

A post written while working on a paper...

#AIBasic

#직교

#정규화

#인공지능기초

#이미지정규화

#이미지

#스케일링

#기초

#Transformer

#Projection

#Normalization

#Norm

#LayerNorm

#ComputerVision

#Basic

#트랜스포머

Original link: LayerNorm in Transformer
